| EasyChair Smart Slide | User Guide/Log in |

| Home |

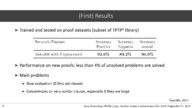

Most modern first-order theorem provers rely on methods like Superposition [1] and Resolution [5], where implications of a clause set are computed to eventually generate the empty clause. The chronological order of computing inferences is a key aspect of proof search and is crucial for the system’s performance. It is typically controlled by a clause selection heuristic. For the task of clause selection, we present a novel heuristic based on artificial neural networks. Deep Learning has demonstrated significant success in several domains [3, 4]. In our approach, clauses are first embedded by a vocabulary that is learned during training and shares features among similar symbols. Compression of clauses to a fixed size is implemented by combining the concepts of dilated convolutions [8] and a dense block [2] to ensure a broad context. The features of a clause to be evaluated are combined with features of the negated conjecture and of the original axiom set, and are processed by a small classifier network. The training is optimized by extensive negative mining and a novel loss function. The heuristic is integrated and tested in the state of the art theorem prover E [6, 7]. In experiments, the network correctly classifies over 90% of all clauses in the test data set with respect to the question if they are useful for the proof or not. However, despite hardware acceleration, the Deep Learning based evaluation currently is several orders of magnitude slower than conventional heuristics, and hence only one from a sample of 25 new proof problems can be solved with the developed technique, highlighting the challenge for future work.